Goal

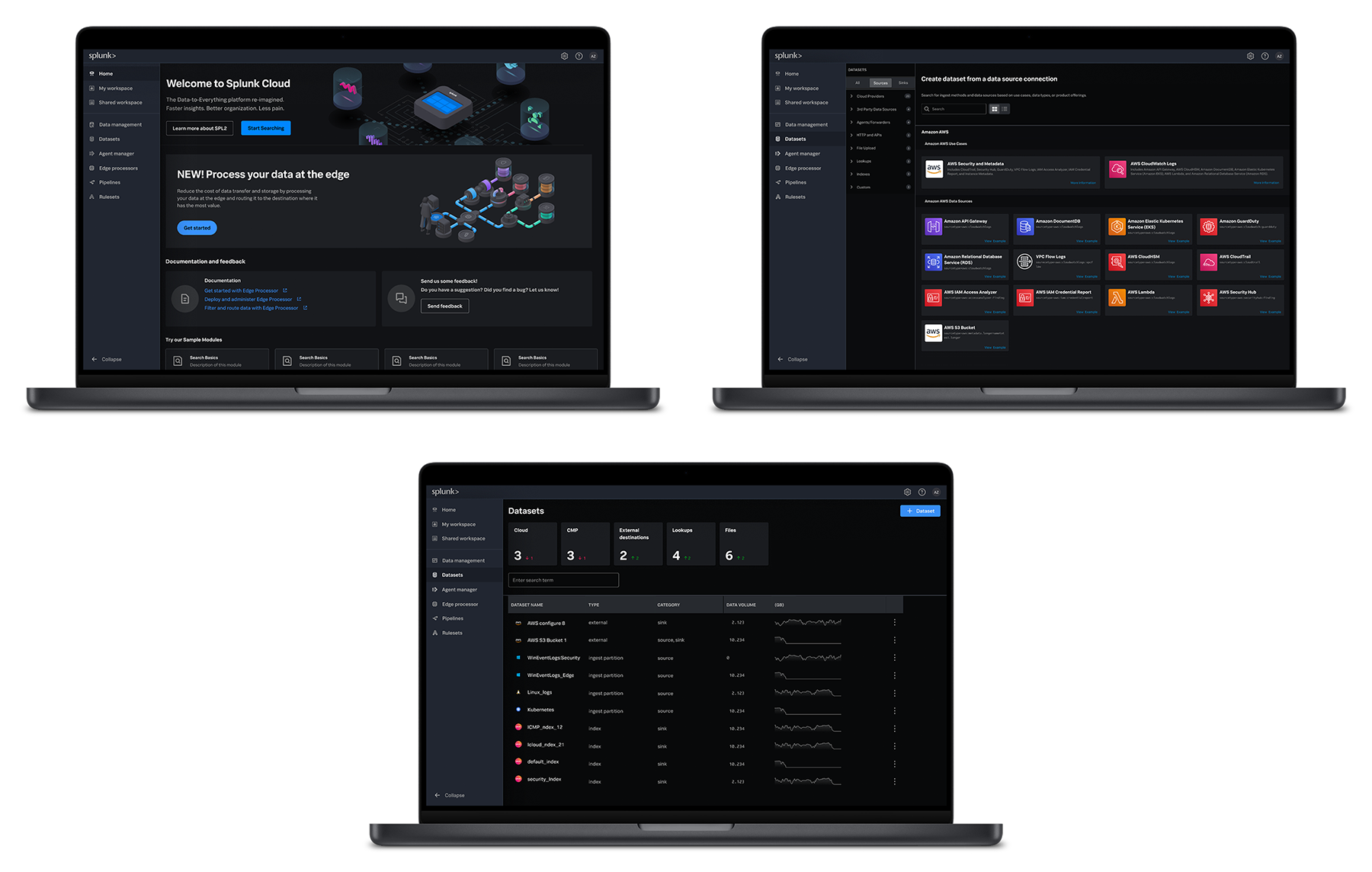

Customers know how to import data easily and control where it goes and exactly what is analyzed, so actionable insights are clearly derived and enable the quickest time to value.

Opportunity

Overcome competitive pressure from nimble upstarts that challenge our ability to simplify our customers’ core data ingestion and processing problems. To do that, we needed to:

- Deliver the level of simplicity customers expect, consistent with the rest of our product portfolio.

- Rebuild outdated legacy workflows from scratch without disrupting current customer configurations.

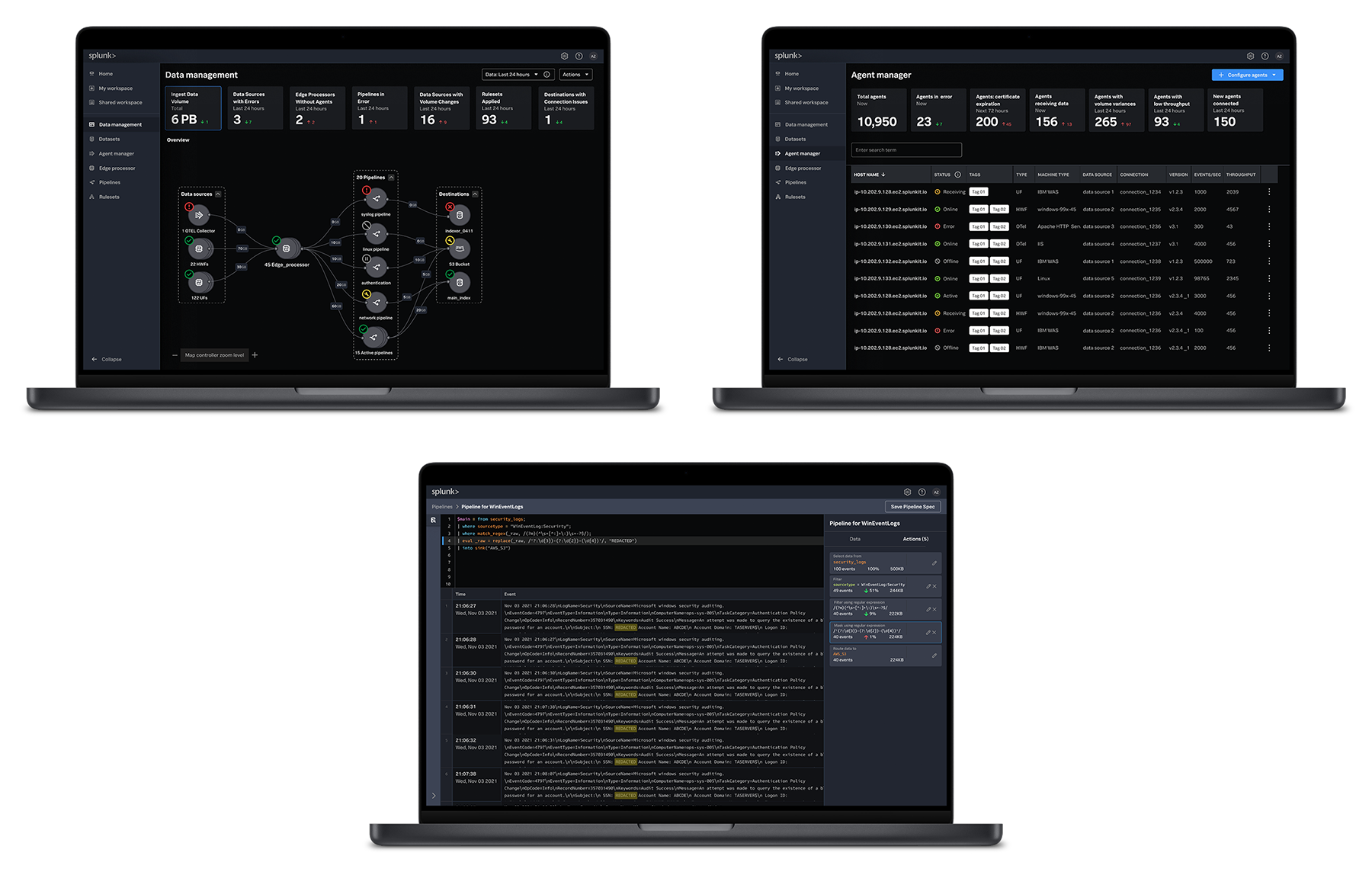

- Create a single, scalable data ingestion model suitable for all data sources and customer use cases.

- Develop an iterative design process capable of reacting quickly to new technical realities, allowing us to be more responsive to market pressures and customer needs.

Solution

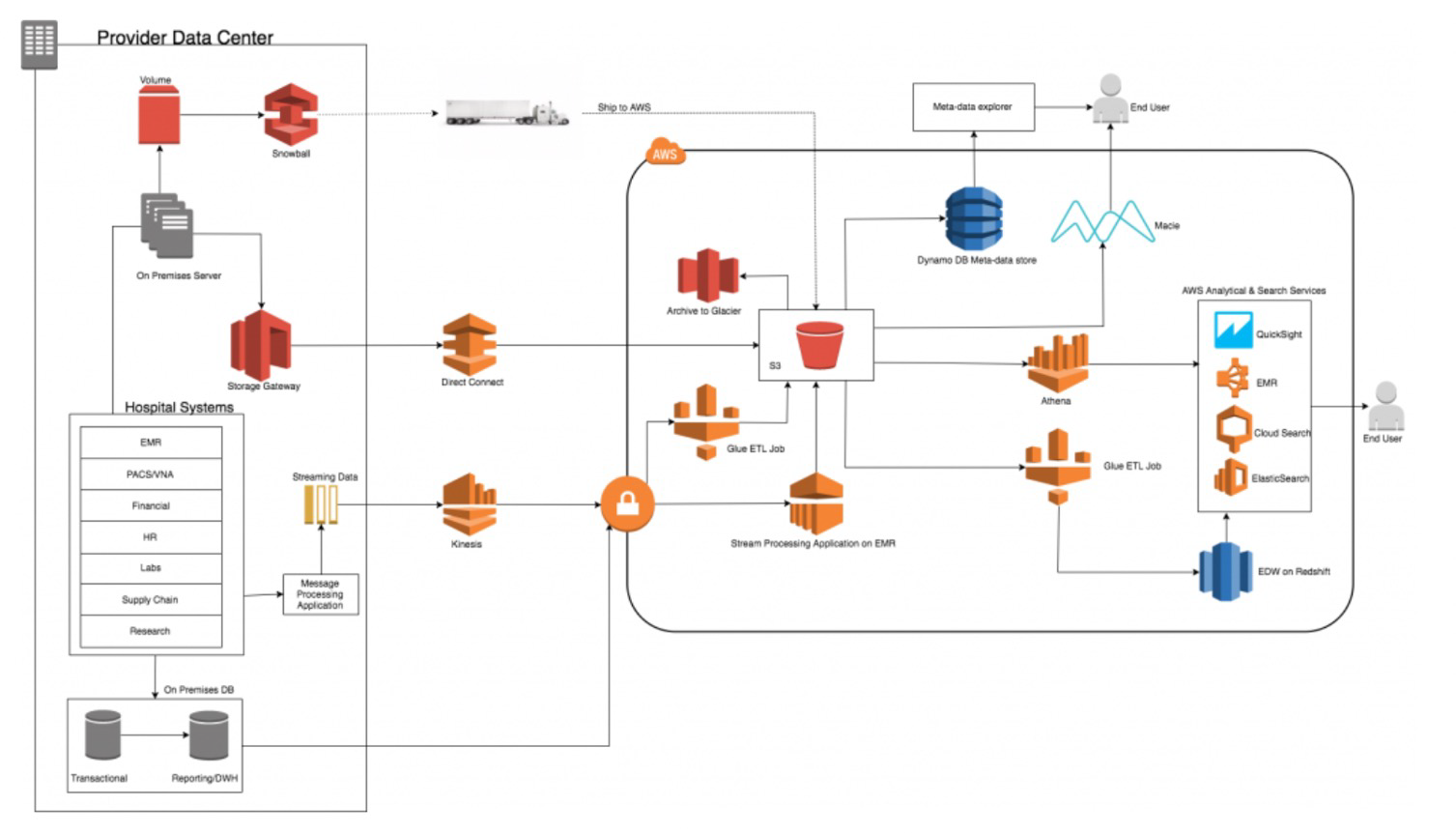

The problem I faced was twofold, and both parts were equally urgent. The first was stopping the bleeding: we were under immense competitive pressure to address a long-standing customer complaint about the complexity of bringing data into our analytics and visualization products. The second was the longer pole: customers needed a more scalable and flexible model that sped up data ingestion while giving them tools to precisely target the parameters defining the data coming into their environments. Starting with deep customer conversations to identify the critical pain points, I crafted a dual approach. We quickly spun up an iteration on our existing product that addressed the core complexity concerns, delivering quick wins and demonstrating our ability to execute. At the same time, I led a focused, nimble team in designing a mid- to long-term process to solve these problems with a zero-to-one mindset.

Outcomes

- Delivered an initial set of data ingest and processing features in 2 months, addressing the top customer pain points identified through end-user research and buying time to develop an end-to-end product vision and rethink the entire data ingestion process.

- Reduced the time required for customers to set up a data ingestion workflow from over 4 hours to an average of 18 minutes.

- Within three months of the rollout of the initial solution, customers doubled the average number of data processing actions assigned to individual ingest activities from 3 to 6 due to the ease and clarity of the new workflows.

- Included customers throughout the entire ideation, design, and development process to ensure alignment with their needs and expectations, building unprecedented levels of customer anticipation for the launch of the new experience and generating consistent user feedback along the lines of “This is going to kill the competition!”

Accomplishments

- Built a product design process around customer feedback. Design is often criticized for relying too heavily on intuition instead of metrics as the foundation of its output. In an environment where data analytics is the product, this can lead to distrust and defensiveness among stakeholders. I avoided these conflicts by implementing a design process focused on frequent, low-touch end-user validation that accelerated overall product development, replacing endless cycles of negotiation with evidence-based decision-making.

- Negotiated parallel strategies that solved short-term problems, buying time for deep product vision work. When the market tells you that your competition is outpacing you, you have no choice but to react. In our case, we had been steadily losing ground to small, nimble upstarts with the luxury of chipping away at our fringes, focusing on single-issue but incredibly significant customer use cases. The belief was that we had to swing for the fences to crush the competition, no matter what it took. I approved of the sentiment but not the approach. My solution was to stem the bleeding with our core customers, delivering interim solutions that reduced any temptation to leave while simultaneously developing a long-term strategy to leapfrog anyone trying to attack us from the fringe. Long story short: it worked.

- Shifted cross-product consistency from an orthodoxy to a framework of coherence focused on end-user needs. The other part of my day job involved driving consistency across the company’s entire product portfolio to achieve universal product alignment. Data ingestion, being the lifeblood of our entire portfolio, was a vital component of this challenge. However, not all data ingestion scenarios are created equal. After carefully analyzing all our customers and their use cases, I realized there would never be a one-size-fits-all solution to this problem. Instead, I developed a flexible solution that prioritized coherence over conformity. The best solutions are the ones that can solve more than a single problem.

What I learned

- A design leader’s most valuable skill is making people feel heard. Managing many competing perspectives and opinions can be the biggest challenge to making great products. Engineering owns the tech stack, product makes the business case, and design represents the voice of the customer. This allows design to be the consensus builder as long as the negotiation process remains equitable and transparent.

- Consistent iterative improvements are the most efficient way to achieve a vision. Too many projects fall apart when ambition outpaces reality. A product vision should, of course, be bold and challenge existing assumptions. However, the path to achieving it needs to be rational and based on attainable goals, so that the team both delivers continuous, meaningful product value and uses measurable progress as its motivating force.